MAPLEE

Modular Autonomous Platform for Landscaping and Environmental Engineering

Project Overview

MAPLEE (Modular Autonomous Platform for Landscaping and Environmental Engineering) is a fully autonomous robot designed to navigate around campus, pulling weeds and collecting loose trash. MAPLEE was designed and implemented as a joint capstone project between an Electrical and Computer Engineering team and a Mechanical Engineering team.

Key Features

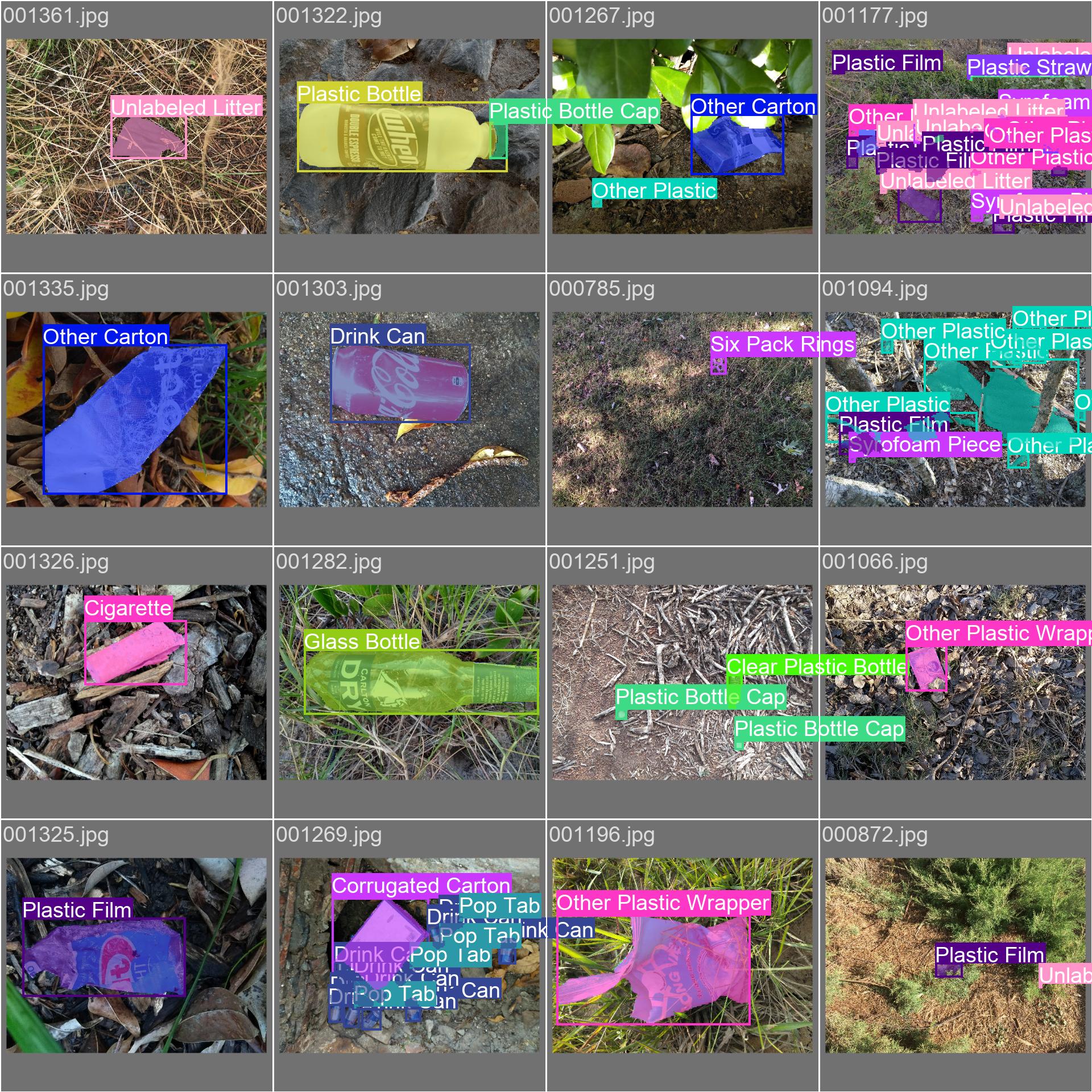

- Real-time object detection using YOLOv8n

- Multi-object tracking with an IoU (Intersection over Union) tracking algorithm

- 3D bounding box and global state estimation

- Integration with ROS (Robot Operating System)

- Low-latency processing optimized for robotic applications

Technical Implementation

Object Detection Module

Uses YOLOv8n, the nano variant of YOLOv8, for fast, lightweight object detection. Depending on its assigned task, the robot runs either a model trained for trash detection or a model trained for weed detection. Each model was trained on a custom dataset and performed adequately for its detection task.
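A minimal sketch of how per-task model selection and confidence filtering could look. The weight file paths, the `Detection` record, and the 0.5 confidence threshold are hypothetical; the project's actual file layout and thresholds are not documented here.

```python
from dataclasses import dataclass

# Hypothetical weight paths, one YOLOv8n model per task.
MODEL_WEIGHTS = {
    "trash": "weights/trash_yolov8n.pt",
    "weed": "weights/weed_yolov8n.pt",
}


@dataclass
class Detection:
    cls_name: str
    confidence: float
    box: tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def weights_for_task(task: str) -> str:
    """Return the weights path for the robot's current task."""
    try:
        return MODEL_WEIGHTS[task]
    except KeyError:
        raise ValueError(
            f"unknown task {task!r}; expected one of {sorted(MODEL_WEIGHTS)}"
        ) from None


def filter_detections(dets: list[Detection], min_conf: float = 0.5) -> list[Detection]:
    """Keep detections at or above an (assumed) confidence threshold."""
    return [d for d in dets if d.confidence >= min_conf]
```

With the `ultralytics` package installed, the selected path could be loaded with `YOLO(path)`; its `predict` call also accepts a `conf` argument that applies the same kind of threshold at inference time.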

Tracking Module

Implements an IoU (Intersection over Union) tracking algorithm to maintain each object's identity across frames, even during occlusions.
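The sketch below shows one common form of IoU tracking, assuming greedy best-IoU-first matching, an IoU threshold of 0.3, and tracks that survive up to 10 consecutive frames without a match so that short occlusions do not reset an object's ID. The class names and parameter values are illustrative assumptions, not the project's tuned implementation.

```python
from dataclasses import dataclass

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: Box
    misses: int = 0  # consecutive frames without a matched detection


class IoUTracker:
    def __init__(self, iou_threshold: float = 0.3, max_misses: int = 10):
        self.iou_threshold = iou_threshold
        self.max_misses = max_misses
        self.tracks: list[Track] = []
        self._next_id = 0

    def update(self, detections: list[Box]) -> list[Track]:
        # Score every (track, detection) pair and match greedily, highest IoU first.
        pairs = sorted(
            ((iou(t.box, d), ti, di)
             for ti, t in enumerate(self.tracks)
             for di, d in enumerate(detections)),
            reverse=True,
        )
        matched_t, matched_d = set(), set()
        for score, ti, di in pairs:
            if score < self.iou_threshold:
                break
            if ti in matched_t or di in matched_d:
                continue
            self.tracks[ti].box = detections[di]
            self.tracks[ti].misses = 0
            matched_t.add(ti)
            matched_d.add(di)
        # Unmatched tracks coast for a few frames to bridge short occlusions.
        for ti, t in enumerate(self.tracks):
            if ti not in matched_t:
                t.misses += 1
        self.tracks = [t for t in self.tracks if t.misses <= self.max_misses]
        # Unmatched detections start new tracks with fresh IDs.
        for di, d in enumerate(detections):
            if di not in matched_d:
                self.tracks.append(Track(self._next_id, d))
                self._next_id += 1
        return self.tracks
```

Greedy matching keeps the tracker dependency-free; an optimal assignment such as `scipy.optimize.linear_sum_assignment` is a common alternative when many boxes overlap.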

Global State Estimation

Uses stereo depth from an Intel RealSense camera to compute the 3D coordinates of detected objects and place them in the global frame using the robot's estimated global state (pose).
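A minimal sketch of the pixel-to-world step, under simplifying assumptions: an ideal pinhole camera with no lens distortion, a level, forward-facing camera, and a planar robot pose `(x, y, yaw)` in the map frame. The function names and the `cam_offset` parameter (camera position in the robot body frame) are hypothetical. In practice, `pyrealsense2` provides `rs2_deproject_pixel_to_point`, which uses the stream's calibrated intrinsics and distortion model, and a ROS system would typically obtain the camera-to-map transform from `tf2`.

```python
import math


def deproject_pixel(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) at depth_m (meters) into the camera optical
    frame (x right, y down, z forward) using an ideal pinhole model."""
    x = (u - cx) / fx * depth_m
    y = (v - cy) / fy * depth_m
    return x, y, depth_m


def camera_to_world(point_cam, robot_x, robot_y, robot_yaw, cam_offset=(0.0, 0.0, 0.0)):
    """Map an optical-frame point into the map frame, assuming a level,
    forward-facing camera mounted at cam_offset in the robot body frame."""
    xc, yc, zc = point_cam
    # Optical frame -> body frame (x forward, y left, z up, as in ROS REP 103).
    bx = zc + cam_offset[0]
    by = -xc + cam_offset[1]
    bz = -yc + cam_offset[2]
    # Rotate by the robot's estimated yaw, then translate by its estimated position.
    c, s = math.cos(robot_yaw), math.sin(robot_yaw)
    return robot_x + c * bx - s * by, robot_y + s * bx + c * by, bz
```

A downward-pitched camera, which is likely for ground-level targets, would need a full 3D rotation in place of the fixed axis swap.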

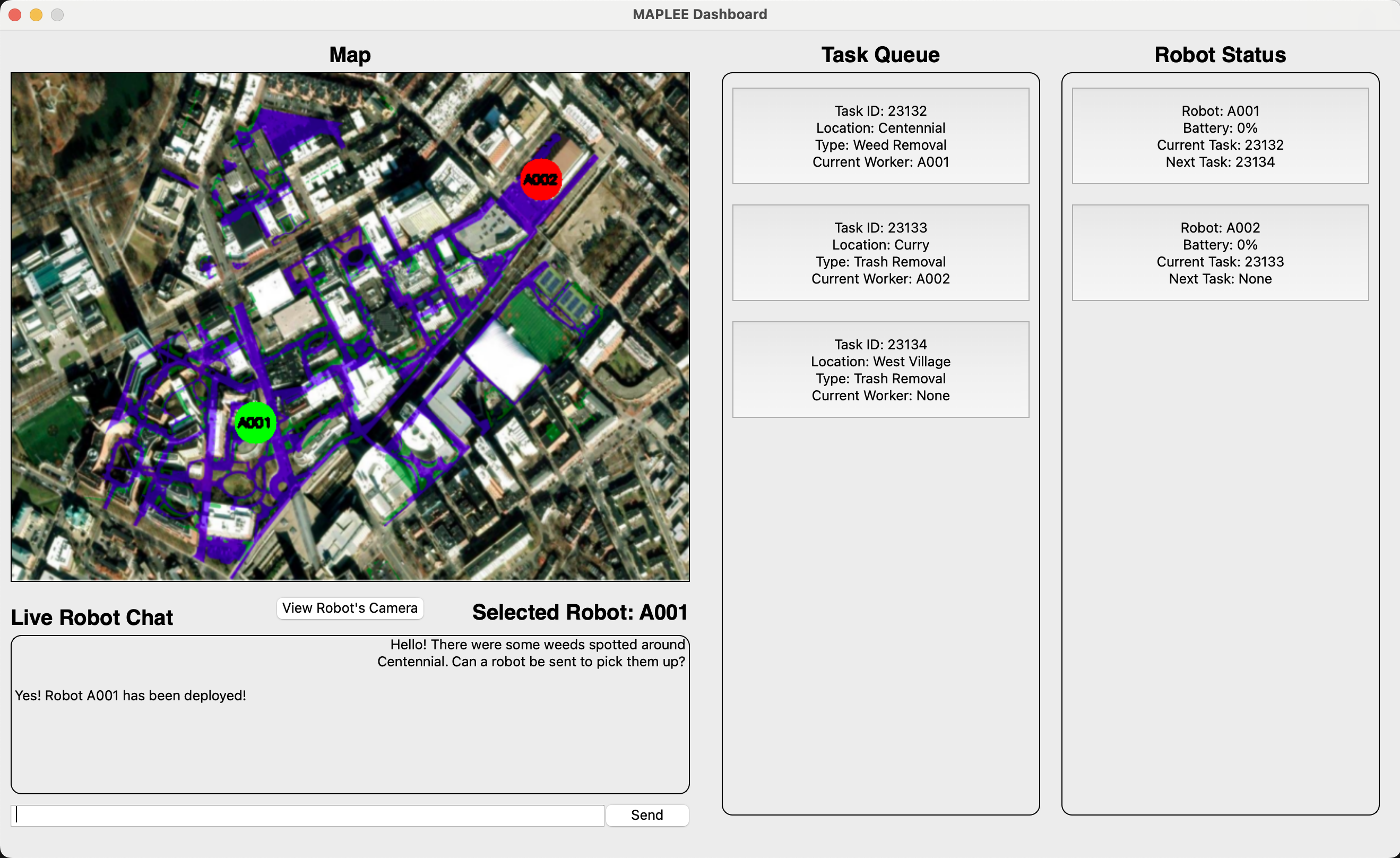

Interactive GUI with Live Map Location

A GUI on the ROS base station lets the user monitor each robot's position, status, and task queue, and view each robot's live camera feed. The GUI also connects to our LLM chat feature, so users can request tasks through a chat interface.
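As an illustration of the task-queue side of the base station, the sketch below keeps one FIFO queue per robot and validates a JSON task request such as one an LLM might be prompted to emit. The JSON schema (`robot_id`, `task_type`, `area`), the robot ID, and all class and function names are hypothetical; the project's actual message formats are not described here.

```python
import json
from collections import deque
from dataclasses import dataclass
from typing import Optional

VALID_TASKS = {"weed", "trash"}  # the two detection tasks described above


@dataclass
class Task:
    robot_id: str
    task_type: str
    area: str  # hypothetical free-form area label, e.g. "north lawn"


class TaskQueues:
    """Per-robot FIFO task queues that a base-station GUI could display."""

    def __init__(self):
        self._queues: dict[str, deque] = {}

    def enqueue(self, task: Task) -> None:
        self._queues.setdefault(task.robot_id, deque()).append(task)

    def next_task(self, robot_id: str) -> Optional[Task]:
        q = self._queues.get(robot_id)
        return q.popleft() if q else None

    def pending(self, robot_id: str) -> list[Task]:
        return list(self._queues.get(robot_id, ()))


def parse_llm_request(raw: str) -> Task:
    """Validate a JSON task request before it reaches a robot's queue."""
    data = json.loads(raw)
    if data.get("task_type") not in VALID_TASKS:
        raise ValueError(f"unsupported task_type: {data.get('task_type')!r}")
    return Task(str(data["robot_id"]), data["task_type"], str(data.get("area", "")))
```

Validating the model's output against a fixed set of task types keeps a malformed or unexpected chat response from being dispatched to a robot.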

Results & Performance

The system achieves:

- Detection mAP@0.5:0.95 of 68% for the grass/weed detection model and 12% for the trash detection model (the trash model's low score comes mainly from classification errors; its localization was good)

- Processing speed of ~15 FPS on an NVIDIA Jetson Xavier NX
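For reference, mAP@0.5:0.95 is conventionally computed COCO-style, averaging the class-mean average precision over ten IoU thresholds; the exact evaluation tool used for the figures above is not stated, so this is the standard definition rather than a confirmed detail of this project. Because the stricter thresholds reward tight boxes while the score still requires the correct class, a model can localize well yet score low if it frequently mislabels objects. The frame time implied by the measured throughput is also shown.

$$
\text{mAP}@[0.5{:}0.95] = \frac{1}{10}\sum_{k=0}^{9} \text{mAP}_{\tau_k},
\qquad \tau_k = 0.50 + 0.05\,k
$$

$$
t_{\text{frame}} \approx \frac{1}{15\ \text{FPS}} \approx 66.7\ \text{ms per frame}
$$

Here $\text{mAP}_{\tau}$ is the mean over classes of the average precision when a detection counts as correct only if its IoU with a ground-truth box of the same class is at least $\tau$.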

Conclusion & Future Work

MAPLEE successfully navigated around campus autonomously, detecting and picking up objects using state-of-the-art computer vision techniques.

Future work includes:

- Developing a more robust dataset to improve model generalizability and performance

- Optimizing the current models, or adopting more efficient ones, for faster live inference

- Developing centroid and/or stem detection to better identify where to center the robot arm over an object or weed (the current system uses the center of the bounding box)

- Adding an on-robot storage area to allow multiple collections in a single trip
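To illustrate why centroid detection could improve arm placement over the current bounding-box center, the sketch below compares the two on a small L-shaped binary mask, such as one a segmentation model (for example a YOLOv8 `-seg` variant) might produce. Using a segmentation mask is an assumption about how centroid detection could be approached; stem detection is not covered here, and the function names are hypothetical.

```python
def bbox_center(box):
    """Center of an (x1, y1, x2, y2) bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def mask_centroid(mask):
    """Centroid (x, y) of the nonzero pixels in a binary mask given as rows of 0/1."""
    total = sx = sy = 0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                total += 1
                sx += x
                sy += y
    if total == 0:
        raise ValueError("mask is empty")
    return (sx / total, sy / total)


# An L-shaped object: its bounding box center falls on empty background.
mask = [
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 1, 1],
]
print(bbox_center((0, 0, 3, 3)))  # (1.5, 1.5)
print(mask_centroid(mask))        # roughly (0.857, 2.143)
```

For irregular shapes like sprawling weeds or crumpled trash, the pixel centroid tracks where the object's mass actually is, while the box center can land on background.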