Object Detection

A variety of object detection models for different applications

Overview

Below are a few of the object detection models I've implemented and used in the past. They build on a variety of state-of-the-art architectures, with custom implementations in some cases. Some models were trained on custom datasets developed for the specific task of the project, and several have been deployed on a variety of robotic platforms.

Key Features

- Real-time object detection

- Custom implementations

- Custom datasets

- Custom models for specific tasks

- Integration with ROS (Robot Operating System)

Technical Implementation

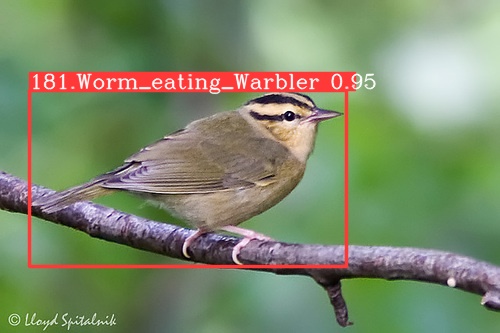

Bird Detection and Classification Model

Specialized YOLOv6 model trained on the Caltech-UCSD Birds dataset. Achieves high accuracy in detecting birds in natural environments.

Weed Detection Model

Custom SSD (Single Shot Detector) model designed for MAPLEE (Modular Autonomous Platform for Landscaping and Environmental Engineering), trained on a custom blend of datasets containing 8,000+ labeled images. It identifies weeds in environments such as grassy fields and brick walkways, enabling MAPLEE to detect and pull weeds across the Northeastern campus.

Trash Detection Model

Fine-tuned YOLOv8n model for environmental monitoring and waste management. Detects various types of litter and debris, supporting MAPLEE and CARL-T (Compact Autonomous Robot for Locating Trash) in locating trash and autonomously collecting it across the Northeastern campus. Despite the low mAP@0.5-0.95, the model detected trash with a high degree of accuracy. The score was lowered by classification errors (trash found but assigned the wrong class) and was not recalculated.

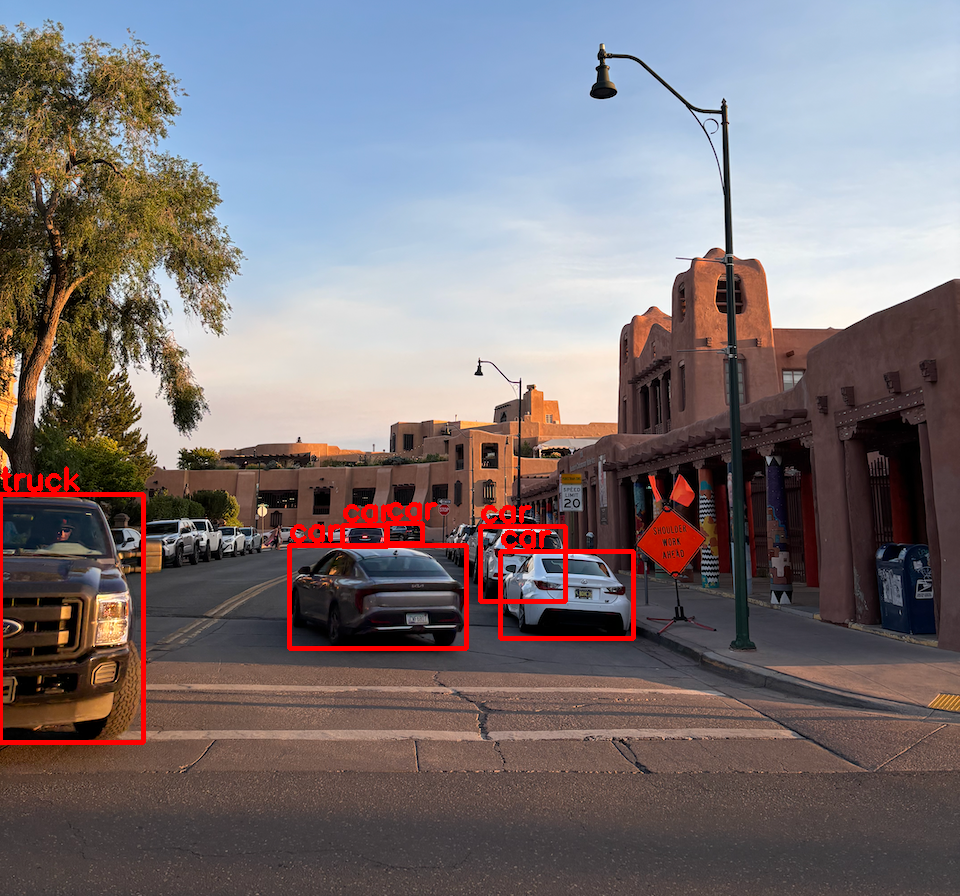
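To illustrate why classification errors can pull down mAP@0.5-0.95 even when trash is located well, here is a minimal sketch of the matching step behind that metric. It is not the evaluation code used for this model: the function names, box format `(x1, y1, x2, y2)`, and class labels are assumptions made for illustration, and a full mAP computation also involves ranking predictions by confidence and averaging precision over recall.

```python
# Illustrative sketch only: names, box format, and labels are assumptions,
# not the evaluation code used for the trash detection model.

def iou(box_a, box_b):
    """Intersection over union for two (x1, y1, x2, y2) boxes."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds averaged by mAP@0.5-0.95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def thresholds_matched(pred_box, pred_label, gt_box, gt_label):
    """Count the IoU thresholds at which a prediction is a true positive.

    A prediction with the wrong class never matches, however well the
    box overlaps the ground truth.
    """
    if pred_label != gt_label:
        return 0
    overlap = iou(pred_box, gt_box)
    return sum(overlap >= t for t in IOU_THRESHOLDS)
```

In this sketch a perfectly placed box labeled with the wrong class matches at none of the ten thresholds, which is one way a detector can find most of the trash and still score a low mAP.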

General Object Detection

Versatile YOLO-12x model for general-purpose object detection. Handles a wide range of everyday objects and scenarios, serving as a fallback when specialized models aren't applicable. Covers the 80 COCO classes and keeps only detections at or above a 0.6 confidence threshold, making it suitable for general computer vision applications and research.
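The 0.6 confidence threshold can be pictured as a simple filter over the detector's raw output. The sketch below is an assumption-laden illustration, not the project's code: the `Detection` class and `filter_detections` function are hypothetical names, and in practice a detection library usually applies this cutoff through its own confidence parameter.

```python
from dataclasses import dataclass

# Hypothetical container for one detection; the field names are
# assumptions for illustration, not the project's data format.
@dataclass
class Detection:
    label: str
    confidence: float
    box: tuple  # (x1, y1, x2, y2) in pixels

CONFIDENCE_THRESHOLD = 0.6  # value stated in the description above


def filter_detections(detections, threshold=CONFIDENCE_THRESHOLD):
    """Keep only detections whose confidence meets the threshold."""
    return [d for d in detections if d.confidence >= threshold]


raw = [
    Detection("person", 0.91, (12, 30, 140, 310)),
    Detection("dog", 0.42, (200, 180, 320, 300)),
    Detection("bicycle", 0.60, (150, 90, 400, 330)),
]
kept = filter_detections(raw)  # drops the 0.42 "dog" detection
```

A higher threshold trades recall for fewer false positives, which is a reasonable default for a general-purpose fallback model.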

Applications

These models have been successfully deployed on a variety of robotic platforms and applications:

MAPLEE

Autonomous robot for detecting and removing weeds and trash across the Northeastern campus. Runs on a Jetson Xavier NX with ROS Noetic.

CARL-T

Compact, lower-cost helper robot that locates trash across Northeastern's campus and labels its location for MAPLEE to clean up. Runs on a Raspberry Pi 4 with ROS Noetic.

Bird Identification App

Work in progress.

Conclusion & Future Work

These models have all been developed for and applied to various robotics platforms and applications, and have performed well enough for their tasks.

Future work includes:

- Using newer state-of-the-art (SOTA) models for faster and more accurate object detection

- Using larger models for bird detection and classification to improve accuracy, since that application does not need high inference speed

- Developing a more efficient model for deployment on resource-constrained devices

Try It Yourself

Upload an image to see our intelligent model selection in action. The system uses OpenAI's GPT-4 Vision to analyze your image and automatically choose the most appropriate detection model.
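As a rough picture of the selection step, the sketch below routes a category string to one of the models described above, falling back to the general detector when nothing specialized applies. Everything here is hypothetical: the category names, model identifiers, and `select_model` function are illustrative assumptions, and the call to the vision model that would produce the category is deliberately left out because its prompt and response format are not described here.

```python
# Hypothetical routing table; the keys and model identifiers are
# illustrative assumptions, not the names used by the actual system.
MODEL_BY_CATEGORY = {
    "bird": "bird_yolov6",
    "weed": "weed_ssd",
    "trash": "trash_yolov8n",
}
FALLBACK_MODEL = "general_yolo12x"  # general-purpose detector


def select_model(category: str) -> str:
    """Map a category (assumed to come from the vision model's analysis
    of the image) to a detection model, defaulting to the general one."""
    return MODEL_BY_CATEGORY.get(category.strip().lower(), FALLBACK_MODEL)
```

Normalizing the category before the lookup keeps the routing tolerant of small variations in the vision model's wording, and the fallback means an unrecognized answer still produces a usable detection.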